The Pursuit of Truth and an Educated Mind: A Conversation with Dr. Hardin (Part 2)

March 2, 2023

Is an educational apocalypse nigh? As technology develops, educators have expressed concern over the AI revolution, penning premature obituaries for everything from the modern English essay to traditional modes of art.

Dr. Hardin disagrees. He believes that AI will invigorate education, bolstering the value of critical and independent thinking instead of diminishing it.

In Part 2 of Hanabi’s conversation with Dr. Hardin, we discuss the overarching lines being redrawn in this new technological era—those dividing truth from lies, the defiant from the submissive, and the independent from the dependent.

Read Part 1 and Part 3 on Hanabi.

I was interested in the philosophical contrast you drew between traditional education and an education that better prepares students for the future in a fast-moving society. Relating to this, I read about a group of teachers who believe we should learn not to compete with this tool but to collaborate with it. A lot of people have been thinking about how best to train students to ask the right questions so they can use this tool to the fullest. What do you think about this shift in mindset?

Absolutely — ChatGPT is a pretty useless tool if you don’t know how to ask the right question. But we, as human beings, have to be able to do more than just phrase the question right.

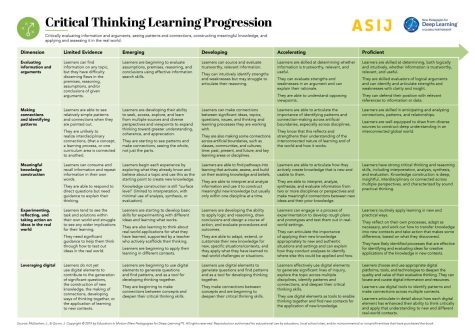

What I fear is that right now, we are at a point with ChatGPT where we trust whatever it prints. It's magical. One of the biggest reasons I believe in the work I do is that when I look around the world, I see societies and large groups of people being taken advantage of, sometimes by political leaders and other times by commercial forces trying to get us to drink soft drinks or go to a particular movie. Through these Learning Progressions (pictured below), I wanted to develop the ability to think, discern, assess, analyze, and evaluate, and to go beyond simply asking: what is ChatGPT telling me I should have for dinner tonight? There's a level of independence and autonomy that I think is fundamental to being a human being.

The Learning Progression chart for the Critical Learning competency. Each chart is tailored around a specific Portrait of a Learner competency.

So beyond just asking the right question, we have to ask: how do I know that's true? Of the 8 billion people on the planet, many will be happy to accept this potentially alternative truth, whatever comes out of ChatGPT, and that is frightening to me.

That’s incredibly powerful — I love what you highlighted in there. That has me thinking of an FDR quote pertaining to how democracies crumble if their citizens aren’t educated, as well as how totalitarian regimes throughout history have utilized methods of propaganda. And to your point about our ability to discern and assess information, I’m reminded of a recent episode of The Ezra Klein Show that explored the many deficiencies of AI and how these algorithms have no conception of truth whatsoever.

That's exactly what frightens me. How do we know what we believe, or what we trust? And at what point do you say, "I think I'm being manipulated" or "I think I'm being taken advantage of"? Helping our students become independent, thoughtful interrogators of their own understanding and truth is, I think, one of the most important things that schools can do. It's always been important, but it has gone from super important to fundamentally important. So, in some ways, that's the most important thing a school can do, because a lot of people will just be sheep: they will follow whatever they know and look for the least difficult options. I think that's a huge problem.

As I see these massive developments in AI unfold before my eyes, I fear that we may lose, or may have already lost, a collective truth because of the sheer volume of information pouring into these systems every second. This could lead to sectarianism and more polarization.

Another fear I have, especially for students who are starting to depend on ChatGPT and view it as the arbiter of truth, is that this technology will become commodified: a target for advertisers and political campaigns.

That’s absolutely one of the things that frightens me most: a powerful tool used for commercial purposes at the end of the day. ChatGPT seems like this wonderful, noble tool that anyone can use for free right now, right? But I guarantee there are plenty of people trying to figure out how to use it for profit — to use the tool for something else. And getting people not to be gullible and getting people not to be sheep — that’s not so easy.

Absolutely. Especially when the platform [ChatGPT] first launched, I was definitely guilty of letting my sheer wonder and amazement keep me from thinking critically about what it was producing.

That's exactly why I feel that I will have failed as a leader if the only way we respond to ChatGPT and similar tools is by thinking about how best to stop kids from cheating. Yes, that's part of the equation. But it really means thinking more deeply: how do we teach our students not to be gullible? How do we teach them not to believe or simply accept anything that artificial intelligence generates or produces? As a school, we want to ensure that we are doing enough to make sure that students can be independent in a world where many influences encourage dependence.